Guide to Data Deduplication

Editor’s Note: This post was originally published in June 2022. We’ve updated the content to reflect the latest information and best practices so you can stay up to date with the most relevant insights on the topic.

Duplicate data exists in every organization, wreaking havoc on reports, analytics, and dashboards. That’s why it is critical that companies prioritize the arduous task of deduplicating their data.

What Is Data Deduplication?

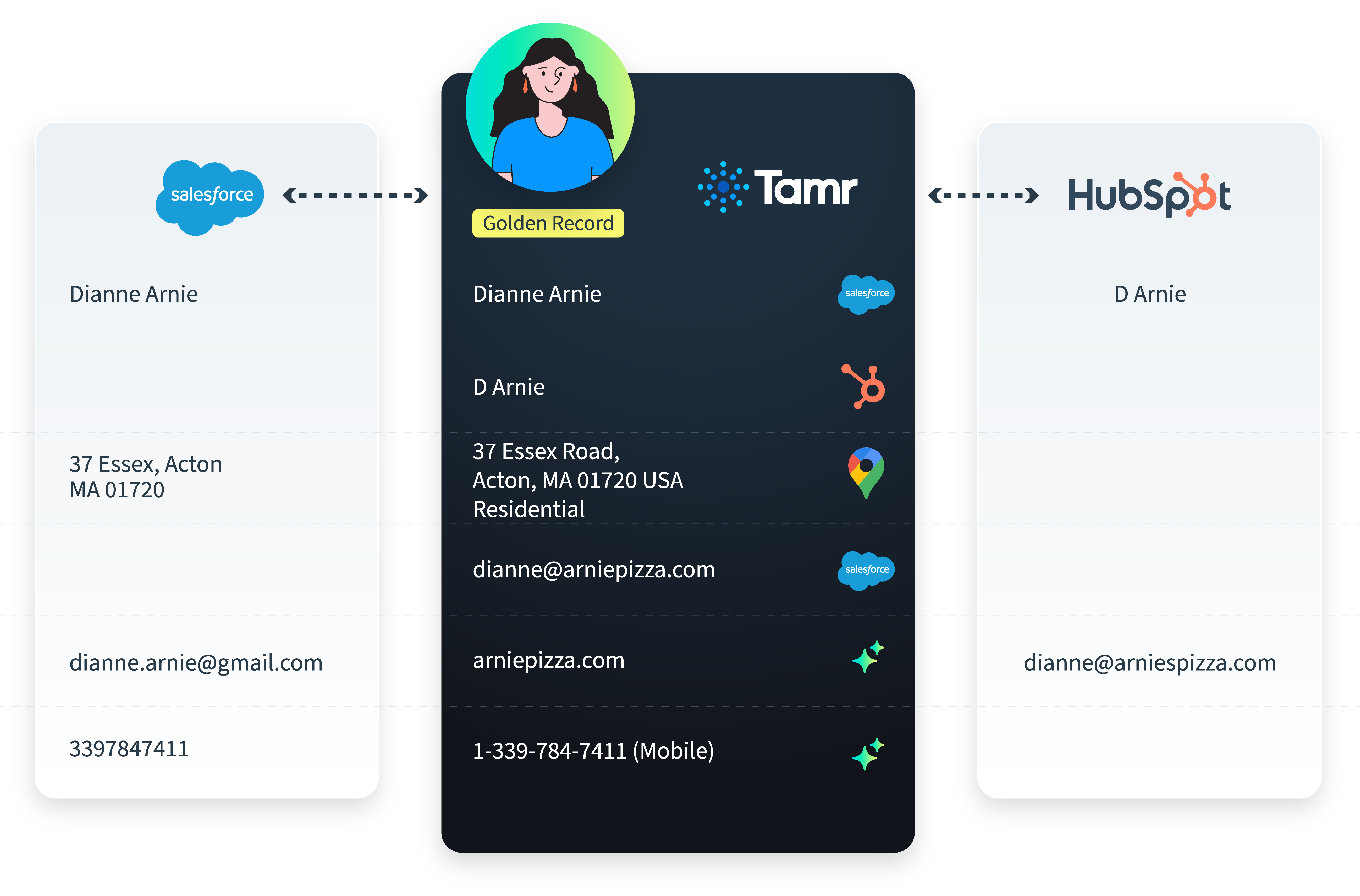

At the most basic level, data deduplication refers to the ongoing process of identifying and removing or combining redundant data to produce one unique instance of each entity—called a golden record—for use in analytics or operations.

Redundant or duplicate data can harm your business and your strategy in many ways, both in operational and analytical use cases. From an operational perspective, you can’t answer questions like “Which account is the right one to contact?” And from an analytics perspective, it’s difficult to answer questions like “Who are my top paying customers by revenue?”

Data deduplication overlaps with data unification, where the task is to ingest data from multiple systems, consolidate records, and clean them. It also overlaps with entity resolution, where the task is to identify the same entity across different data sources and data formats.

What Are the Benefits of Deduplication?

Data deduplication can benefit your business in a myriad of ways. For example, improved data quality and better customer data unification can lead to more (and greater) cost savings, more effective marketing campaigns, improved return on investment, better customer experiences, and more.

- Improved cost savings: This is the most obvious and direct benefit of data deduplication. Eliminating duplicate data helps save on data preparation and correction costs, sparing data analysts from spending as much as 80% of their time on arduous tasks such as data wrangling and transformation. Instead, they can focus on more valuable data analysis and strategies that directly support the business. Reducing duplicate data also helps reduce employee churn by enabling resources to spend time on strategic tasks that add value versus frequently fixing bad data.

- More accurate analytics: Duplicate data distorts a company’s visibility into its customer base, leading to inaccurate or misleading insights. For example, deduplicating data helps teams feel confident that they have the most accurate data related to customer revenue, which, in turn, improves the overall quality of revenue analytics and projections.

- Better customer experiences: Duplicate data can cause companies to focus on the wrong targets, and even worse, to erroneously contact the same person multiple times. By eliminating duplicate data, the customer success team gains a clean, holistic view of their customers so they can provide the best customer experience possible.

When Is Data Deduplication Used?

Every business has experienced the headaches caused by duplicate data. From customer records being created multiple times across systems, employees manually entering data incorrectly, or data imports from outside sources, there are certain patterns that create duplicate data. Ideally, you would want to prevent duplicate creation at the point of entry with real-time, fuzzy search APIs. After all, once the redundant data enters your systems, it can be quite difficult to get rid of it.

Data deduplication can help overcome repetitive data caused by the situations below.

- Different expressions: One of the most common causes of duplicate data in databases and operating systems is standard terms expressed in different ways. Take Tamr Inc. and Tamr Incorporated as an example. A human can look at the two records and know instantly that they’re referring to the same company. But databases and operating systems will treat them as if they are two distinct records. The same problem happens with job titles as well: VP, V.P., and Vice President are good examples.

- Nicknames (short names): People are often known by multiple names, such as a more casual version of their first name, a nickname, or simply initials. For example, someone named Andrew John Wyatt might be known as Andy Wyatt, A.J. Wyatt, or Andy J. Wyatt. In all cases, these name variations can easily create duplicate records in systems like your CRM.

- Typos (“fat fingers”): Whenever humans are responsible for inputting data, it’s inevitable that you’ll have data quality issues. In fact, the error rate for human data entry ranges from 1%-4%, often caused by issues such as misspelled company names (“Gooogle” or “Amason”) or misentered contact names (“Thomas” entered as “Tomas”). In both cases, they will create duplicate records.

- Titles and suffixes: Contact data may include a title or a suffix, and when entered incorrectly, those can cause duplicate data as well. A person called Dr. Andrew Wyatt and a person called Andrew Wyatt could appear as separate records and live in different data systems, even though they are the same person.

- Website/URLs: Inconsistent formatting of website URLs is another common issue. For example, some fields may contain “https://” or “www” while others may not. Furthermore, different records might have different top-level domains, such as amazon.com vs. amazon.co.uk. All of these differences will cause duplicate data.

- Number formats: The most common number formats that cause duplicate data are phone numbers and dates. There are many ways to format a phone number. For example, 1234567890, 123-456-7890, (123) 456-7890, and 1-123-456-7890. In the case of dates, there are also many ways to represent them. For example, 20220607, 06/07/2022, and 2022-6-7. Number fields are also prone to typos and other issues, causing different representations of the same value.

- Partial matches: This is one of the more complex issues and one that traditional rules or simple match algorithms can’t easily resolve. In the case of partial matches, the records share similarities with each other but are not exactly the same entity. Take Harvard University, Harvard Business School, and Harvard Business Review Publishing as examples. From an affiliated organization perspective, they are all affiliated with Harvard University. But from a mail delivery perspective, they would be separate and distinct entities.

- Redundant entries: This occurs when people enter perfectly accurate records multiple times into a single system (a CRM, for example) or multiple systems (e.g., multiple CRM instances) because nothing exists to prevent them from doing so, creating duplication within the source system and across source systems.

Clearly, there are many causes of duplicate records in data systems, and any given database will usually reflect a combination of them. That’s why when you go through the process to deduplicate your data, you need to consider many—if not all—of these factors.

How Data Deduplication Works

In its simplest form, data deduplication is the process of ensuring only a single copy of truth, or “golden record,” is used for analytics or operations, a core aspect of a master data management (MDM) system. While there are traditional approaches to dealing with data deduplication—including data standardization, the use of external IDs, and fuzzy matching with rules—these approaches only partially solve the problem. The best solution available to help solve the problem, and do so in conjunction with entity resolution and data unification, is AI-native MDM.

AI-native MDM provides a better way to eliminate duplicates so you can deliver the clean, trustworthy data your organization needs to drive business decisions and achieve business goals. The best MDM solutions not only embed AI at the core, but they also drive data quality improvement by providing the following capabilities:

- AI-driven entity resolution: Reconciliation of multiple, disparate datasets by using AI to detect and match records that are the same entity, such as a person or business

- AI/ML mastering: Human feedback combined with pre-trained machine learning models and semantic comparison with large language models (LLMs)

- Data enrichment: Referential matching paired with a reference database of trustworthy, third-party sources, linked together with unique, persistent IDs

- Data quality: Insights into missing, incomplete, incorrect, and duplicative data

- Real-time APIs: Instant access to a mastered view of every entity that matters to the business

How to Prevent Duplicate Data

Modern data ecosystems have the ability to simultaneously process data in both batch and streaming modes. And this needs to occur not only from source to consumption but also back to the source, which is often an operational system (or systems) itself.

Having the ability to read and write in real time prevents users from creating bad data in the first place. For example, real-time reading can enable autocomplete functions to block errors at the point of entry—while the data is still in motion. And real-time writing through MDM services and match index can share good data back to the source systems. With real-time reading and writing, it’s easy to identify a duplicate and block it from entering the database, merge it with an existing record automatically, or send it to a data steward for review.

AI-native MDM has the modern capabilities that organizations need to deal with duplicate data issues and solve the problem in a way that is more efficient and effective than traditional, rules-based approaches.

Duplicate data, along with many other pitfalls, is unavoidable in an environment where data grows exponentially each day. But when you use AI-native MDM, deduping your data becomes much easier.

Discover how Tamr’s AI-native MDM delivers the clean, deduplicated golden records your business needs to succeed. Download our ebook, “Golden Records 2.0: The AI-Native MDM Advantage,” to learn more.

Get a free, no-obligation 30-minute demo of Tamr.

Discover how our AI-native MDM solution can help you master your data with ease!