Introducing Tamr’s New Curator Hub & Vision for Agentic Data Curation

In the age of AI, clean, curated data isn’t a “nice to have”; it's a critical requirement. Yet the gap between messy reality and a trusted data foundation prevents organizations from realizing the true potential of their analytical, operational, and GenAI initiatives.

Join Tamr experts for an exclusive first look at Tamr’s Curator Hub functionality and the unveiling of a new, transformative concept: Agentic Data Curation. Discover Tamr's vision for an Agentic AI future where specialized, intelligent agents work alongside your data team, acting as powerful assistants to automate routine tasks, provide contextual insights, and accelerate the delivery of trustworthy data.

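Hear from our expert speakers:

- Anthony Deighton, Chief Executive Officer, Tamr

- Clint Richardson, Head of Data Product Management, Tamr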

What you’ll learn:

- What Tamr's vision is for AI agent-enabled data teams

- How Agentic Data Curation can solve the "last mile" of data mastering challenges

- What Tamr’s new Curator Hub does and how it serves as the mission control center that puts the power of human-in-the-loop agentic workflows into your data stewards' hands.

- How Tamr's flexible architecture allows you to leverage pre-built agent libraries and even "Bring Your Own Agent" to inject custom business logic into your data mastering efforts


Want to read the transcript? Dive right in.

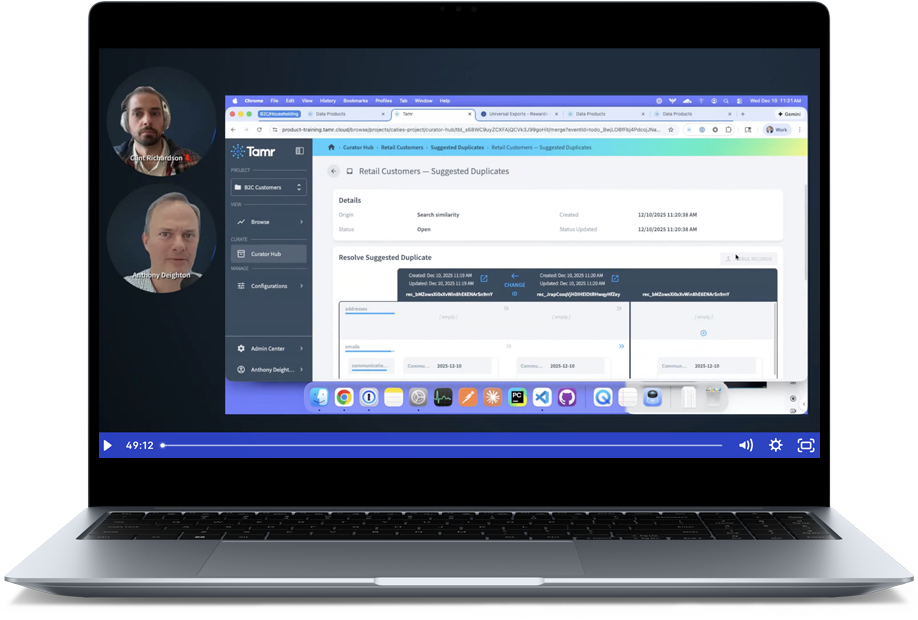

Alright, perfect. Good morning, good afternoon, and good evening, everyone. Thank you so much for joining today's session, introducing Tamr's new Curator Hub and our vision for agentic data curation.

Before we begin, I'd like to cover just a few housekeeping items.

Closed captioning is available. Simply hover over the virtual stage and click the CC button located at the bottom of your screen. We also encourage you to submit your questions using the Q&A tab in the engagement panel on your right, and we'll do our best to answer them at the end of the presentation.

And if we are unable to get to your question, we'll follow up with you directly. We've also added some additional resources, which are available in the docs tab in the engagement panel on your right. There, you can find some additional related content. And today's webinar will be available on demand after we wrap.

It will be sent to you via email directly. And then lastly, as we head into the demo portion of the program today, some of the content may be small or hard to read. To help, you can hover over the bottom of your screen to maximize your window to full screen or use the plus and minus icons to zoom in or out.

And our esteemed speakers for today's session are Anthony Deighton, chief executive officer here at Tamr, and Clint Richardson, head of data product management at Tamr. So welcome to you both, and I'm gonna pass it over to you, Anthony.

Awesome. Thank you so much, and welcome, everyone.

So let's jump into it. We're gonna cover a lot today. I'm super excited to share both some slides and some real running software, so we're gonna see some really cool demos and walk through some stuff.

So we'll talk a little bit about where Tamr's overall focus is and what our strategy and vision are. We'll do two different demos, first introducing Curator Hub as a general idea and then digging specifically into the agentic curation concept.

And lastly, we'll finish and share some of the work we're doing around bring your own agent, which is super exciting.

So just to step back a little and set some context for the overall content we're sharing today, I thought it'd be useful to frame where we stand as an organization in terms of our strategy and vision. In a way, almost nothing here has changed in the many years we've been working on the Tamr project. We've always had this core idea that businesses run better when they have access to clean, up-to-date, organized data, and that the core challenge in the enterprise today isn't access to data or visualization of it, but rather the actual data itself. Most people don't complain about the quality of their dashboards or their analysis; they look at the underlying data and think to themselves, gee, this data doesn't seem right.

And they're looking for ways to solve that problem. In my view, this challenge has become even more acute in today's AI-forward world. As we start to deploy AI strategies (and I'm sure many of you on this call are thinking about how to leverage AI, how to build and train models, how to deploy large language models, and how to deploy chat interfaces into your data and organization), building these things on top of messy data means the agents and AI systems are working off either siloed views of your data or incomplete and incorrect data.

And while a human might pause and say, this doesn't seem right, I need to think about it, the AI may not. In a way, you can end up scaling the worst possible outcomes you can imagine.

And this is particularly true in this new agentic world, where we're turning over processing and work to AI systems and letting them actually move, change, update, and interact with the data. When they don't have access to clean data, or the data they're viewing is only a small sliver or silo of the possible data, that can lead to a lot of challenges. We view this as the major challenge for enterprise software going into the future.

And this isn't a new problem. People have been thinking about how to organize and clean up enterprise data for a long time; we've often referred to this as master data management. But master data management, or MDM, has generally been a failure as a category.

If you're a new hire and you start your new job and you're told you get to be in charge of MDM, historically, that was viewed as like, oh my gosh. You know, this is gonna be a long project. It's gonna take a long time, and it's not likely to be successful. Why is that?

Well, there are two primary strategies we've seen organizations take when they approach the MDM problem.

The first is that they view it primarily as a people problem. The idea here is that we're gonna throw people at the problem: a team of people who walk through the data record by record and try to improve its quality. Oftentimes, MDM systems deployed with this approach are really workflow engines for adjudicating or approving changes to master data.

The second strategy that we see is that they view the problem as primarily a rules problem, that what we should do is take the master data, stick it into a system, and kinda lock it down, make sure no one can change it, put in place a whole series of confusing, overlapping, and conflicting rules that try to make sure that nothing ever happens to that data.

Our view is that neither of these approaches alone is a good strategy, because the fundamental problem of organizing enterprise data is a scale problem. This goes back to the original academic research that literally founded Tamr: this is what we call in computer science an n-squared problem, meaning the challenge grows with the square of the amount of underlying data, so you're never gonna hire enough people or write enough rules to solve it.

The only way to get a handle on master data is to deploy an AI-native approach.

This is, in fact, the only mechanism for solving the problem.
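To make the n-squared point concrete: a naive matcher that compares every record with every other record must score n(n-1)/2 pairs, so each tenfold increase in records means roughly a hundredfold increase in comparisons. A minimal Python sketch of that arithmetic (the record counts are illustrative, not Tamr figures):

```python
def pairwise_comparisons(n: int) -> int:
    """Number of distinct record pairs a naive all-pairs matcher must score."""
    return n * (n - 1) // 2

# Illustrative record counts only.
for n in (1_000, 10_000, 1_000_000):
    print(f"{n:>9,} records -> {pairwise_comparisons(n):>15,} pairs")
```

For 1,000 records that is 499,500 pairs; for 1,000,000 records it is 499,999,500,000. Practical entity-resolution systems generally prune candidate pairs before scoring them; how Tamr does this isn't covered in this talk.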

This unlocks, in my view, a few important capabilities, and these are things you'll learn about today and that we'll, in fact, show you. The first is this idea of curation at scale. Because the underlying system is AI native and probabilistic by nature, when the system is unsure, it can escalate for human feedback and intervention. So humans can be in the loop of these AI systems in a way that's very different from a rules-based system.

Rules are binary. They're either sort of true or false.

Probabilistic systems, by their nature, are continuous. So we can find the cases that sit on what we'd call the model's decision boundary, where the AI is least certain, and escalate those to humans.
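One way to picture "escalating the cases on the decision boundary": given match probabilities from a model, rank candidate pairs by how close they are to 0.5 and show the most uncertain ones to a person first. This is a generic uncertainty-ranking sketch, not Tamr's implementation; the pair IDs, probabilities, and review budget are hypothetical.

```python
def most_uncertain(scored_pairs: dict[str, float], budget: int) -> list[str]:
    """Return up to `budget` pair IDs whose match probability is closest to 0.5.

    A probability near 0.5 means the model is least sure, so those pairs are
    the most valuable ones to put in front of a human reviewer.
    """
    ranked = sorted(scored_pairs, key=lambda pair: abs(scored_pairs[pair] - 0.5))
    return ranked[:budget]

# Hypothetical model output: pair ID -> probability that the two records match.
scores = {"a|b": 0.97, "c|d": 0.52, "e|f": 0.08, "g|h": 0.41, "i|j": 0.66}
print(most_uncertain(scores, budget=2))  # ['c|d', 'g|h']
```

Confident cases (0.97, 0.08) fall to the bottom of the list, which is what lets the model handle them without human time.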

Second, because we can now organize data much more rapidly and at a reasonable cost, we can organize more entities and link them together into relationships between and within entities, something, again, we'll show you in a few minutes.

Third, once we've organized the data into this enterprise knowledge graph, we can surface it into large language models through interfaces like MCP (Model Context Protocol). As we talked about before, the large language model now has the knowledge of your enterprise: it knows about all of the data, how it's organized, how it's related, and how it's linked. That gives context to those modern AI models, which unlocks the fourth capability: taking action.

When the models go to take action, they have a key to walk through those data and know where they need to act. Where is this data stored? What systems is it in? How do I find that particular record when I want to make an update or a change?

So this is, in our view, why the only way to solve the MDM problem is an AI-native approach.

What that provides is a system that looks like this: messy data on the left, working through the Tamr system. We can bring that data together and deliver it against a few key use cases.

The first is analytical use cases: we can simply make your dashboards more accurate. All of a sudden, they go from being incomplete and siloed to giving a complete view of the business. The second is that we can consume this data directly, literally as a 360 page, or through common AI endpoints like chat interfaces.

And last, by surfacing it through a series of APIs, we can tie it into your operational processes so that every time data changes, downstream and upstream systems are notified of those changes.

So as I indicated, you know, our view is that humans alone or rules alone can't solve the problem, but it's also true and fair that AI alone can't solve the problem. So our view is that there's really a balanced approach across all three strategies. Yes. We wanna take advantage of AI because that's the way we can actually get a handle on the problem because it's a scale problem.

But there are cases where simple rules are both valuable and, frankly, very quick to implement.

And there are also cases where we want human intervention, where we want humans to come in and make a decision. The key here, however, is to use the AI on the vast majority of cases, because in the vast majority of cases the model can do as good a job or better.

And, of course, the good part about models is that they don't eat or sleep. They work all weekend. They work all night. They're highly parallelizable.

So we can solve the scale problem, focus human attention on the cases where it's most valuable, and use rules where a rule is sufficient.

Again, the challenge with rule systems isn't the rules themselves; it's where the rules are implemented in the system. In a traditional rule system, you start with a blank slate and build rules up, trying to write enough rules to solve the problem.

With an AI-native approach, we start with a set of decision trees. One way to think about this conceptually is that we encode in the system every possible rule you could have written, and we simply find the path through that tree that is the best rule in that moment. This also makes the system explainable, because we can always return to you, the user, the path we took through those decision trees. And it means you begin with a complete solution: we don't start with a blank slate and ask you to write rules. We start with a working model and let you tune and train the updates that are necessary for your particular environment.
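A toy illustration of why a tree-based decision is explainable: every answer is a path from the root to a leaf, and that path can be returned alongside the answer. The tree, feature names, and thresholds below are invented for illustration; a real system would learn them from data rather than hand-code them, and this is not Tamr's model.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Node:
    feature: str      # pairwise similarity feature tested at this node
    threshold: float  # go left if value <= threshold, else right
    left: "Tree"
    right: "Tree"

Tree = Union[Node, str]  # a leaf is just the decision label

def decide(tree: Tree, features: dict[str, float]) -> tuple[str, list[str]]:
    """Walk the tree and return (decision, human-readable path taken)."""
    path: list[str] = []
    while isinstance(tree, Node):
        value = features[tree.feature]
        if value <= tree.threshold:
            path.append(f"{tree.feature}={value:.2f} <= {tree.threshold}")
            tree = tree.left
        else:
            path.append(f"{tree.feature}={value:.2f} > {tree.threshold}")
            tree = tree.right
    return tree, path

# Hypothetical hand-built tree over name/email/phone similarity scores.
TREE = Node("name_sim", 0.8,
            left="no match",
            right=Node("email_sim", 0.9,
                       left=Node("phone_sim", 0.9, left="no match", right="match"),
                       right="match"))

decision, path = decide(TREE, {"name_sim": 0.93, "email_sim": 0.40, "phone_sim": 1.0})
print(decision)  # match
for step in path:
    print(" ", step)
```

Here the pair matches even though the emails differ, and the returned path shows exactly why: similar names plus an identical phone number.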

And so, last slide, just to bring this all together: it's our view that we're now at a time where we can, in fact, use AI to do basic data management tasks. That can be the core and foundation of a modern MDM system: AI at the core, with enough rules and human curation where needed. In a very literal sense, that's what we want to show you today. We're gonna show you how this all comes together, and then we're gonna talk about what we're doing around agentic curation: how we can bring AI into the curation process itself, and how you can build and train your own agents to do that work.

So let me start with a very basic operational flow that introduces some of the core concepts, and then Clint's gonna jump in and take us to the next level. To start the demo experience, I wanna start, in a way, at the end.

I wanna start with a customer of our fictitious demo organization named Diane Burney.

Diane is like any customer here in our Tamr MDM system. She's been given a Tamr ID so we can always find her in the system. We have all of the best information we need about Diane together in our 360 page, and we have visibility into where Diane sits in all the underlying source systems. You can see that there are, in fact, two sources with information about Diane and five source records.

And you can see that this is from Data Extract Live and Data Hub Live, clearly some demo systems. You can see the primary keys in those underlying systems, and you can also get a good sense of the variation in the data that sits in them. So, to my earlier point, when an AI agent needs to know who Diane Burney is, it has access to this Tamr ID and knows which source systems it can traverse to find all of her information.

Now Diane is also a member of a household, and you can see that she has been assigned a household ID. If I open that up, you can see everything about this household. It has two household members and a location: Sophie and Diane are together in this household. This is gonna be important because we're gonna introduce a new player to the system, so let's do that. Imagine that Diane Burney has another family member, but we don't know who she is yet.

And like many people, she's gonna go and register on a website to, in this case, get a coupon. Her name is Tracy Burney, and here's her email address. She's come on to this website and registered.

She's given her phone number. Like many customers who come to our website, she's filling out the minimum information necessary to complete the form so she can get her discount. When she gets her discount, we put a record into the underlying operational system, and we update Tamr with that information. And because this is a demo, I can lift the veil on what happened behind the scenes.

We can see the Tamr API response here, and you can see we've created a new record for this person named Tracy Burney, and she's been given a Tamr ID. So we can take that Tamr ID and find Tracy in the underlying system. There's Tracy.

Now we don't know very much about Tracy because she's just given us an email address and a phone number.

But let's see if we can build up a more complete picture of Tracy as a customer. Like many customers, Tracy is gonna come and register on this website again. This could be Tracy registering a second time on the website, or it could be that she walked into a retail location and shared her information with a retail employee, or maybe it came through a CRM system. But just to simulate that here, Tracy's come on.

Now you'll notice that Tracy has used a slightly different spelling of her name and a different email address. So it's a little unclear whether this is the same Tracy Burney we were talking about before. And that's exactly what Tamr figures out.

Because in the new Curator Hub, when the new record for this second Tracy Burney comes in, it's added to a queue of suggested duplicates.

So these are records that didn't quite hit the threshold for the model to say, yes, these are in fact the same person.

But they also didn't hit the threshold to say, no, they're definitely not the same person. So we ask a human to come in and give us some feedback, and we present all of the necessary information here on the screen.
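The suggested-duplicates queue behaves like a three-way split on the model's match score: confident matches merge automatically, confident non-matches stay separate, and everything in between waits for a person. A minimal sketch of that routing; the threshold values and names are assumptions for illustration, not Tamr settings.

```python
from enum import Enum

class Route(Enum):
    AUTO_MERGE = "auto-merge"
    HUMAN_REVIEW = "suggested duplicate"
    KEEP_SEPARATE = "keep separate"

# Hypothetical thresholds; in practice these would be tuned per use case.
MERGE_AT = 0.90
REJECT_BELOW = 0.30

def route_pair(match_probability: float) -> Route:
    """Decide what to do with a candidate pair based on the model's confidence."""
    if match_probability >= MERGE_AT:
        return Route.AUTO_MERGE
    if match_probability < REJECT_BELOW:
        return Route.KEEP_SEPARATE
    return Route.HUMAN_REVIEW

print(route_pair(0.95).value)  # auto-merge
print(route_pair(0.62).value)  # suggested duplicate
print(route_pair(0.05).value)  # keep separate
```

Widening the gap between the two thresholds sends more pairs to people; narrowing it lets the model decide more on its own.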

So we can see everything here. You can see the original email address, the new one that she used, and you can see what will happen when we bring these two records together, what the final golden record will be. And so now as a human, I can apply my intelligence into the system and say, yeah. In fact, these are the same person.

So I'm gonna bring these two records together and have a single view of Tracy Burney as a customer. And here she is, the new Tracy Burney with her updated information.

And importantly, we also have a full history of everything that's happened. And this is, of course, a live demo: here is the original creation. The Tamr web API created the very first Tracy Burney record, and then I personally came in and gave my human feedback to the system to bring these two records together.
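Merging two records into one golden record while keeping an audit trail can be sketched as follows. The survivorship rule (the newer non-empty value wins), the field names, the example values, and the actor strings are all assumptions for illustration; Tamr's own survivorship and history model may differ.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class Record:
    record_id: str
    updated: datetime
    values: dict[str, str]
    history: list[str] = field(default_factory=list)

def merge(a: Record, b: Record, actor: str) -> Record:
    """Combine two records; newer non-empty values win, and the merge is logged."""
    older, newer = sorted((a, b), key=lambda r: r.updated)
    values = dict(older.values)  # copy so the inputs are not mutated
    values.update({k: v for k, v in newer.values.items() if v})
    golden = Record(record_id=older.record_id, updated=newer.updated, values=values,
                    history=older.history + newer.history)
    golden.history.append(f"merged {newer.record_id} into {older.record_id} by {actor}")
    return golden

# Hypothetical records loosely modeled on the demo.
first = Record("r1", datetime(2025, 1, 1, tzinfo=timezone.utc),
               {"name": "Tracy Burney", "email": "tracy@example.com", "phone": "555-0100"},
               ["created by web API"])
second = Record("r2", datetime(2025, 1, 2, tzinfo=timezone.utc),
                {"name": "Tracy Burney", "email": "tburney@example.org", "phone": ""},
                ["created by web API"])
golden = merge(first, second, actor="data steward")
print(golden.values)
print(golden.history)
```

The empty phone on the newer record does not overwrite the known one, and the history keeps both creation events plus the human merge.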

And we're not done yet, of course, because Tracy's gonna come into the system and register a third time, and we're gonna get a third swing at the bat. This time, Tracy doesn't spell everything out: she uses "Mass Ave" instead of "Massachusetts Avenue."
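Expanding an abbreviation like "Mass Ave" is the kind of normalization that lets two spellings of the same address line up. A deliberately tiny sketch: the abbreviation table is a hypothetical sample, and real address enrichment (parsing, validation, geocoding) is far more involved than token substitution.

```python
import re

# Hypothetical sample of expansions; real services rely on full reference data.
EXPANSIONS = {
    "ave": "Avenue",
    "blvd": "Boulevard",
    "rd": "Road",
    "mass": "Massachusetts",
}

def normalize_street(street: str) -> str:
    """Expand known abbreviations token by token and capitalize the rest."""
    tokens = re.findall(r"[A-Za-z0-9]+", street)  # drops punctuation such as "."
    return " ".join(EXPANSIONS.get(t.lower(), t.capitalize()) for t in tokens)

print(normalize_street("100 Mass Ave."))             # 100 Massachusetts Avenue
print(normalize_street("100 Massachusetts Avenue"))  # 100 Massachusetts Avenue
```

After normalization both inputs compare equal, which is what makes matching on address possible at all.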

And what you'll see is that when that record comes into the system, we immediately update Tamr with the address information. So now we have a nice map, because we know where she lives.

And if we go back to the history of this record and scroll down to the bottom, you'll see a couple of important things have happened. First, we had this update where we brought in the new address. But behind the scenes, Tamr noticed that change in the record and then updated, normalized, and corrected that address. And in doing so, something really important happened.

We were able to identify that Tracy is a member of that household that we were talking about earlier with Diane and the other person. We'll find out her name in a moment.

And so we can go take a look at that household.

So here's that household. Before, it had just two members, Diane and Sophie. There we go, remembered her name.

And now we see we've in fact brought Tracy in. This is an example of building the network of relationships inside your master data, your entity data. Here, we've mapped a relationship between these three members of the household, who really are all together in the same household. You can see that we've brought this together, and we have a complete history of all of the changes that got us here. Importantly, this 360 page now knows a lot more about Tracy because we've brought her into this household relationship. We can see which household she's part of, who else is in it, etcetera.
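Assuming addresses have already been normalized (for example, "Mass Ave" expanded to "Massachusetts Avenue"), a household can be approximated by grouping people who share a normalized address. This is a simplified sketch under that single assumption; the names and addresses are hypothetical, and a production household model would weigh more evidence than a shared address.

```python
from collections import defaultdict

def build_households(people: list[dict[str, str]]) -> dict[str, list[str]]:
    """Group person names by their (already normalized) address."""
    households: dict[str, list[str]] = defaultdict(list)
    for person in people:
        households[person["address"]].append(person["name"])
    return dict(households)

# Hypothetical, pre-normalized records.
people = [
    {"name": "Diane Burney", "address": "100 Massachusetts Avenue"},
    {"name": "Sophie", "address": "100 Massachusetts Avenue"},
    {"name": "Tracy Burney", "address": "100 Massachusetts Avenue"},
    {"name": "Jordan Lee", "address": "1 Main Street"},
]
print(build_households(people))
```

Without the normalization step, Tracy's "Mass Ave" record would land in a household of one.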

So in this way, we have tied the MDM system into these operational processes. In real time, we've brought these data together. When we needed human intervention, we've surfaced that into the Curator Hub, given people a way to provide that feedback in real time, kept track of all of the changes in the underlying data.

And when the system was certain, in the third case, it updated the address information behind the scenes and enriched it, and that allowed us to bring Tracy into this household relationship.

So with that, let me turn the screen over to Clint who can share where we're going with all this.

Thanks, Anthony. I'll lean into the part of the story where the agents escalate to humans when they can't figure something out themselves. We showed how agents can screen your data as it comes into the system in real time, but they can also help behind the scenes as you go about your day and then put a suggestion in front of you in Curator Hub.

So, like we just saw, Curator Hub is our homepage for the new curation experience inside of Tamr. It's a place where your human curators can collaborate with each other or with automatic processes, and where AI agents can do tasks for you behind the scenes and put them there for you to review later.

And I'll talk about that with a demo.

To anchor the story of this demo, I'm gonna play the part of a pretend company in the B2B tech sales space. We're called Dataflow Solutions, and we happen to be a Tamr customer.

That's gonna be helpful to us in a little bit. At Dataflow Solutions, we've put out a new ebook, and we have a form on our website so people can download it. This is something that happens every day in our industry. As a side note, at the end of this we'll be talking about an ebook on agentic curation that we're just rolling out.

But anyway, here's our website, Dataflow Solutions. We have a form to get the guide, and Al Smith has come in and filled it out. Just like Tracy, Al has filled out only the fields with an asterisk and left everything else blank. Al told us he works at Apex Clearing and that he's the head of data. That's a potentially very exciting lead for us as a company.

Unfortunately, though, Al told us nothing else about the company he works for, which is a problem because at Dataflow Solutions we segment our sales and marketing by geography and industry. So while Al may be a really great lead, we don't know enough about him to act on it, and we'll see that in a second. But first, let's get him his guide.

Great. Al is on his merry way with his guide.

And, again, like I said, Dataflow Solutions is a Tamr customer. So we can see Al Smith just showed up here inside of Tamr. Here he is.

Again, head of data at Apex Clearing. We have his email, and we probably trust it, right? Because he knows he's gonna get the guide emailed to him. We have an integration hooked in with our marketing platform, so our Marketo data guide leads source piped Al into Tamr as soon as he pressed that button to get his guide.

We also went ahead and created a corresponding company record for Apex Clearing and linked it to Al as an employee-employer relationship.

And that's all part of us at Dataflow being a Tamr customer and having this operational integration, so that we can react to these things happening in real time and reflect that data and those relationships inside our MDM system.

The problem, though, is that we still don't know anything about Apex Clearing. We guessed its website from Al's email, but we don't know if that's actually its website.

And we don't even technically know if it's a real company. Normally, this is where the story stops, right? Because we have to hope that someone comes in, finds this record, and cleans it up.
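Guessing a company website from a work email domain, as described above, is a useful but unverified heuristic, which is exactly why the record still needs enrichment. A minimal sketch; the list of consumer mail domains is a small hypothetical sample, and the example addresses are made up.

```python
from typing import Optional

# Small, hypothetical sample of consumer mail domains that say nothing about the employer.
FREE_MAIL = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}

def guess_website(email: str) -> Optional[str]:
    """Return a candidate company website from an email address, or None."""
    _, sep, domain = email.strip().lower().rpartition("@")
    if not sep or not domain or domain in FREE_MAIL:
        return None  # no usable domain, or a personal mailbox
    return f"https://www.{domain}"

print(guess_website("Al.Smith@Example.com"))  # https://www.example.com
print(guess_website("someone@gmail.com"))     # None
```

The output is only a candidate: as the demo shows, a company's real website can differ from its email domain.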

But with agents in Curator Hub, I can switch over and see a new suggested edit for Apex Clearing.

What I'm seeing here on the left is the original source information we had: Apex Clearing and, again, the guessed website.

And what's on the right in green are the suggested updated values. You can see a new, full company name and the correct website for Apex Clearing, which happens to be apexfintechsolutions.com.

An LEI number to identify that company, the fact that they're in Dallas, a DUNS number, a phone number, the fact that they're in financial technology, and a corresponding NAICS code.

And this is great because now, if I commit these changes, I have enough information to action this record, and I know who on my marketing or sales team to give it to, so they can chase Al and see if he's actually a good lead.
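The left/right view of a suggested edit is essentially a field-level diff between the current record and the agent's proposal. A sketch of computing that diff; the field names are assumptions and the website values are placeholders, not data from the demo.

```python
def suggested_changes(current: dict[str, str],
                      proposed: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Return {field: (current_value, proposed_value)} for every field the proposal changes or fills."""
    changes = {}
    for name, new_value in proposed.items():
        old_value = current.get(name, "")  # a missing field counts as empty
        if new_value != old_value:
            changes[name] = (old_value, new_value)
    return changes

# Placeholder values loosely based on the demo.
current = {"name": "Apex Clearing", "website": "example.com", "city": ""}
proposed = {"name": "Apex Clearing", "website": "example.org", "city": "Dallas",
            "industry": "Financial Technology"}
for field_name, (old, new) in suggested_changes(current, proposed).items():
    print(f"{field_name}: {old!r} -> {new!r}")
```

Unchanged fields (here, the name) drop out, so a reviewer only sees what committing the suggestion would actually change.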

Let me show how we got here. First, we tried to answer the simple question: is Apex Clearing a real company? The tool the agent used for that is Tamr's firmographic search. Behind the scenes, we've compiled a corpus of company data across open source and private providers, and as your data runs through Tamr, we always ask this first question: is this company record a real company? We look it up in that source and verify that it's real.

And we were able to find Apex Clearing. Because GLEIF is one of the sources we bundle in, we're able to return an identifier for it, an LEI. That's exciting because we can then turn to another trusted provider. So we're taking the first step up the rung of data quality and asking, is this a real company?

Can you tell me something about them? Now we can take the next step by turning to another provider of trusted information, in this case D&B, calling its DUNS match and saying, "D&B, here is a company name with an LEI. What do you know about them?" And it turns out they know exactly who we're talking about.

So they can return the fuller company name. They can return the DUNS number. They can tell us that the registered address is 350 North Saint Paul Street, Suite 1300.

And now we're really starting to fill out the picture of who Apex Clearing is.
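The LEI that comes back from GLEIF data is self-checking: under ISO 17442, an LEI is 20 alphanumeric characters, the last two of which are check digits computed with ISO/IEC 7064 MOD 97-10 (the same scheme IBANs use). A minimal sketch of computing and validating those digits; the 18-character base in the example is made up and is not a registered LEI.

```python
def _to_digits(code: str) -> str:
    """Map 0-9 to themselves and A-Z to 10-35, as MOD 97-10 requires."""
    return "".join(str(int(ch, 36)) for ch in code.upper())

def lei_check_digits(base18: str) -> str:
    """Compute the two check digits for an 18-character LEI base."""
    remainder = int(_to_digits(base18 + "00")) % 97
    return f"{98 - remainder:02d}"

def is_valid_lei(lei: str) -> bool:
    """True if `lei` is 20 ASCII alphanumerics and the whole code is 1 mod 97."""
    return (len(lei) == 20 and lei.isascii() and lei.isalnum()
            and int(_to_digits(lei)) % 97 == 1)

base = "5493001ABCDEFGHIJK"  # hypothetical 18-character base, not a real LEI
lei = base + lei_check_digits(base)
print(lei, is_valid_lei(lei))
```

A check like this only catches typos and malformed identifiers; whether the entity behind a valid-looking LEI exists still requires a lookup, which is what the firmographic search step does.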

And just like Anthony showed with address enrichment, now that we have an address, the next tool the agent called was our address enrichment service. It standardizes the address, parses the street address into its relevant components, and adds latitude and longitude.

And at this point, we've solved the problem of entity resolution.
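The enrichment sequence described above (verify the company exists, match it with a trusted provider, then standardize the address) is essentially a chain of tools where each step only runs if the previous one produced something to build on. A sketch with stub tools: every function name and return value here is hypothetical and merely stands in for Tamr's firmographic search, a D&B match, and address enrichment, not their real APIs.

```python
from typing import Callable, Optional

Fields = dict[str, str]
Tool = Callable[[Fields], Optional[Fields]]

def firmographic_search(rec: Fields) -> Optional[Fields]:
    """Stub for 'is this a real company?': returns None when nothing is found."""
    if rec.get("company") == "Apex Clearing":
        return {**rec, "lei": "<LEI>"}
    return None

def provider_match(rec: Fields) -> Optional[Fields]:
    """Stub for a trusted-provider lookup keyed on company name plus LEI."""
    if "lei" not in rec:
        return None
    return {**rec, "duns": "<DUNS>", "address": "350 N Saint Paul St, Dallas"}

def address_enrichment(rec: Fields) -> Optional[Fields]:
    """Stub for address standardization: expands two abbreviations only."""
    street = rec["address"].replace(" N ", " North ").replace(" St,", " Street,")
    return {**rec, "address": street}

def run_chain(rec: Fields, tools: list[Tool]) -> tuple[Fields, list[str]]:
    """Apply tools in order; stop at the first tool that cannot verify or enrich."""
    log: list[str] = []
    for tool in tools:
        result = tool(rec)
        if result is None:
            log.append(f"{tool.__name__}: no result, stopping")
            break
        log.append(f"{tool.__name__}: ok")
        rec = result
    return rec, log

record, steps = run_chain({"company": "Apex Clearing"},
                          [firmographic_search, provider_match, address_enrichment])
print(record["address"])  # 350 North Saint Paul Street, Dallas
print(steps)
```

Stopping early matters: if the company can't be verified, later tools never get a chance to attach confident-looking data to a record that may not be real.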

We know this is a real company, who they are, and where they are, and we can then engage LLMs even more directly. To be clear, we're not saying, "Hey, LLM, here's Apex Clearing; is this a hot lead?" We're saying, "LLM, here's Apex Clearing with these critical identifiers, and they're in Dallas. What can you tell me about them?"

And because it can reason in the right context here, not solving entity resolution but instead being told "here's a known entity; tell me what else you know about it," it can come up with really helpful information: that Apex Clearing is a financial technology company, what its website is, that it's a private company, and what its LinkedIn URL is.
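The key design point is the order of operations: the LLM is asked about an entity that has already been resolved, with its identifiers in the prompt, rather than being asked to resolve it. A sketch of building such a prompt; the field names and wording are assumptions, and the call to an actual model is deliberately left out.

```python
def grounded_prompt(entity: dict[str, str], wanted: list[str]) -> str:
    """Build a prompt that pins the LLM to an already-resolved entity."""
    identifiers = "\n".join(f"- {k}: {v}" for k, v in entity.items() if v)  # skip empty fields
    fields = ", ".join(wanted)
    return (
        "The following company has already been verified; do not substitute a different company.\n"
        f"{identifiers}\n"
        f"Using only information about this exact entity, provide: {fields}. "
        "Answer 'unknown' for anything you cannot determine."
    )

# Placeholder identifiers; real values would come from the earlier enrichment steps.
entity = {"name": "Apex Clearing", "lei": "<LEI from GLEIF>",
          "duns": "<DUNS from D&B>", "city": "Dallas"}
print(grounded_prompt(entity, ["industry", "website", "ownership type", "LinkedIn URL"]))
```

Asking for "unknown" instead of a guess keeps the model's answers reviewable in the same suggested-edit flow as the other tools.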

So through this combination of tools, we can start with a very sparse record and end with a record we can actually action and use to power our business. That's exciting, and I want to use it as a launch pad for talking not just about Tamr's agents, but about how you can also bring your own agent into Tamr with our architecture.

This is a slide with lots of boxes on it. The important part is that the blue boxes are Tamr's architecture, and the gray boxes are what our customers bring.

And appropriately, at the center of everything is the real-time system of record; everything orients around it. That's the operational data layer, which you interact with using our APIs and which you can subscribe to for data change events. You can create a webhook inside Tamr using our UI, and that creates a subscription to, essentially, a pub/sub queue of the data change events happening inside your MDM system.

What's exciting about that is you can react in real time to your data changes. You can pipe this data to CRMs, for example Salesforce. You can have an integration where data updates in Tamr are immediately reflected back in Salesforce or HubSpot.
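On the subscriber side, reacting to data change events amounts to decoding each webhook delivery and routing it by event type. A sketch of that routing logic only; the payload fields (`type`, `tamrId`, `changes`) and the event name `record.updated` are hypothetical, since Tamr's actual webhook schema isn't shown in this talk, and the HTTP endpoint itself is left out.

```python
import json
from typing import Callable

Handler = Callable[[dict], str]
HANDLERS: dict[str, Handler] = {}

def on(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler for one (hypothetical) event type."""
    def register(fn: Handler) -> Handler:
        HANDLERS[event_type] = fn
        return fn
    return register

@on("record.updated")
def sync_to_crm(event: dict) -> str:
    # Placeholder for pushing the changed fields to a CRM such as Salesforce or HubSpot.
    return f"sync {event['tamrId']} fields {sorted(event['changes'])} to CRM"

def dispatch(body: bytes) -> str:
    """Decode one webhook delivery and route it; unknown types are ignored, not errors."""
    event = json.loads(body)
    handler = HANDLERS.get(event.get("type", ""))
    return handler(event) if handler else f"ignored {event.get('type')}"

delivery = json.dumps({"type": "record.updated", "tamrId": "t-1",
                       "changes": {"phone": "555-0100", "email": "a@example.com"}}).encode()
print(dispatch(delivery))  # sync t-1 fields ['email', 'phone'] to CRM
```

Ignoring unknown event types keeps the subscriber working if new kinds of events start arriving on the same queue.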

And that architecture is, at its core, what we saw in the demo today, and our roadmap is oriented around a prebuilt library of agents and tools. What happened is that an API call came in to create a record in the store, and an orchestration agent reacted to it and used a set of tools to try to create a record we could actually action.

And, as Anthony showed earlier, we also have synchronous agents at the front door that screen your data and suggest duplicates as you're using the system. So we have these two different ways of tying in agents.

Again, our roadmap is oriented around those two things: real-time entity resolution and asynchronous data quality. It'll leverage a set of tools that exist today, and that will continue to grow, for filling in the gaps in your data, tailored to your business problem.

But because you can create webhooks inside Tamr, you too can react to data changes and bring your own agents.

And it's relatively simple. It looks like this: a four-step process.

Step one is the same as in the demo: you create or update records in Tamr using our APIs.

Step two: a trigger is sent to the webhook you've configured inside Tamr.

Step three: your agent reacts to that data. That can look like custom lead scoring, or a support agent reacting to a person joining a household or someone's personal information changing. Or you can have a fraud detection agent react to a business's identifier changing, like an LEI, or to duplicates coming in. Your agents can react to everything that's happening in Tamr.

And step four: those agents can respond by writing back into your Tamr MDM system using our APIs. They can do that either by updating data directly or by putting it in front of a human to review. So you have the capability not just to let your agents run away with your data, but also to keep a human in the loop so that the human has the final say.
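The four steps can be sketched as a small agent function: it receives a change event (step 2), applies custom logic such as lead scoring (step 3), and returns either a direct update or a suggestion for human review (step 4). Everything here is hypothetical: the scoring rule, the segment lists, the confidence cutoff, the event shape, and the returned action. Writing the result back would go through Tamr's APIs, which are not modeled.

```python
from dataclasses import dataclass

# Hypothetical business logic: which segments the sales team cares about.
TARGET_INDUSTRIES = {"Financial Technology", "Banking"}
SENIOR_TITLES = ("head of", "chief", "vp", "director")
AUTO_APPLY_CONFIDENCE = 0.8

@dataclass
class Action:
    kind: str     # "update" writes directly; "suggest" goes to a human reviewer
    tamr_id: str
    fields: dict

def score_lead(person: dict, company: dict) -> tuple[int, float]:
    """Return (score 0-100, confidence 0-1); confidence drops when inputs are missing."""
    score, known = 0, 0
    if company.get("industry"):
        known += 1
        score += 50 if company["industry"] in TARGET_INDUSTRIES else 10
    if person.get("title"):
        known += 1
        score += 50 if person["title"].lower().startswith(SENIOR_TITLES) else 10
    return score, known / 2

def lead_scoring_agent(event: dict) -> Action:
    """React to a change event and decide whether a human should review the result."""
    score, confidence = score_lead(event["person"], event["company"])
    kind = "update" if confidence >= AUTO_APPLY_CONFIDENCE else "suggest"
    return Action(kind, event["tamrId"], {"lead_score": score})

# Sparse record (no industry yet): the score is routed to a human instead of written directly.
print(lead_scoring_agent({"tamrId": "t-43", "person": {"title": "Head of Data"}, "company": {}}))
```

Tying the write-or-suggest choice to how much the agent actually knew is one simple way to keep humans in the loop exactly where the agent is guessing.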

And this is what we think is really powerful, because it's a pretty simple way to take advantage of all of the data science, engineering, and AI expertise that you have in your org and tie it to your MDM system, so that these things don't have to live separately but can instead benefit from each other.

And that's how we think about modern MDM: we own the core MDM problem, meaning entity resolution, data quality, and how we produce those trusted golden records for you to run your business on, and those are tied into operational systems.

It's also extensible, in that you can bring your own agents to inject your business logic into the MDM process.

That way, you not only trust your data even more, because you're applying the custom logic that matters to you and reflecting it in your golden records, but you also drive value from it, because you can tie it to those business workflows.

And with that, I promised we would mention an ebook, so I will turn it over to Anthony to talk about... or, sorry, first, two key takeaways. Operational MDM continues to get easier to achieve with Tamr: as we saw today, tying in our APIs and reacting to them is very simple. And the next generation of MDM capabilities looks like prebuilt agents, plus agents that you bring to the table and very easily tie into Tamr. So with that, I'll finally turn it over to Anthony to talk about the ebook that we just rolled out.

Sure. Thanks, Clint. There are two QR codes on the screen. You can grab your phone and scan them.

The first: if you'd like a more detailed personal demo that's directly relevant to your use cases, go ahead and set up some time.

The second is an ebook that goes over everything we covered today, and more, in a lot of detail. It talks about not only where we are today but where we're investing and going in the future, and I would highly recommend it: a really thoughtful piece on the future of agentic curation. You can scan the second QR code to grab it. Now, I'm confident this presentation elicited all kinds of questions and comments, and there was at least one in the Q&A panel that I already answered, but I'm happy to go over it again.

Over to Tatiana, or... sorry, Kara.

I'm here.

Yeah. Go ahead.

No worries. Thank you both again. For everybody joining us, if you want to stay on, we're going to shift into some live Q&A. If you haven't already, feel free to submit your questions, and we'll try to get through as many as we can.

First, we had the following question from Carl, which Anthony responded to in the Q&A tab, but it might be worth elaborating on a bit here: Are our source systems, for example CRM, also updated when data is corrected automatically in Tamr?

Well, clearly, I've already given my thoughts on this. So, Clint, what do you think?

Yeah. The short answer is yes; there are ways to do that. The primary way to do it in real time is via those webhooks that I mentioned. That looks like coming into Tamr and giving us a REST endpoint to consume those creation, deletion, and update events, and then integrating that REST endpoint with your CRM system to update the data in your CRM.

You can also, as Anthony said, do this in a nightly, batch-style mode: publishing a snapshot of your data at the end of the day and integrating that. Those are the two patterns, and that's the slightly longer answer of yes.
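The two sync patterns can be sketched side by side. This is a hypothetical Python illustration, not Tamr's or any CRM's real API: the event dictionary keys (`type`, `id`, `attributes`), the `FIELD_MAP`, and the operation tuples are all assumptions. The real-time path translates one webhook event at a time; the batch path diffs two nightly snapshots to produce the same kinds of operations.

```python
from typing import Any

Op = tuple[str, str, dict[str, Any]]  # (operation, record ID, CRM fields)

# Hypothetical mapping from golden-record attributes to CRM field names.
FIELD_MAP = {"name": "AccountName", "phone": "Phone"}


def to_crm_fields(attrs: dict[str, Any]) -> dict[str, Any]:
    """Keep only mapped attributes, renamed to their CRM field names."""
    return {FIELD_MAP[k]: v for k, v in attrs.items() if k in FIELD_MAP}


def event_to_crm_op(event: dict[str, Any]) -> Op:
    """Real-time pattern: translate one webhook event into a CRM operation."""
    kind = event["type"]
    if kind not in ("create", "update", "delete"):
        raise ValueError(f"unknown event type: {kind}")
    fields = {} if kind == "delete" else to_crm_fields(event.get("attributes", {}))
    return (kind, event["id"], fields)


def snapshot_diff(prev: dict[str, dict[str, Any]],
                  curr: dict[str, dict[str, Any]]) -> list[Op]:
    """Batch pattern: diff two nightly snapshots keyed by record ID."""
    ops: list[Op] = []
    for rid in sorted(curr.keys() - prev.keys()):
        ops.append(("create", rid, to_crm_fields(curr[rid])))
    for rid in sorted(curr.keys() & prev.keys()):
        if curr[rid] != prev[rid]:
            ops.append(("update", rid, to_crm_fields(curr[rid])))
    for rid in sorted(prev.keys() - curr.keys()):
        ops.append(("delete", rid, {}))
    return ops
```

Producing the same operation shape from both paths means the code that actually writes to the CRM can be shared, whichever pattern you choose.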

Great. Next question, from Colin: This is obviously powerful in a commercial, customer-focused environment. Have you considered the complexities of possible use in noncommercial and highly complex data environments such as health care?

Sure. Our view is that wherever data is critical to operations, whether business operations or not, there's an opportunity in this context. My experience as a consumer of health care, and as a general observer of the industry, is that there is often a lot of complexity in the underlying data.

Doctors, for example, have admitting privileges across many different hospitals, and they often have offices in one place and do rounds at another, just to give two very simple examples.

Let's not even talk about health care billing and those sorts of questions. So there's a tremendous opportunity for these kinds of capabilities in an industry like that. I'd also point to government, which is clearly not a commercial industry, where there are both large volumes of data and a lot of complexity in that underlying data. We have customers in both of those, and obviously in many other industries as well. So I would think about it more in terms of where messy, unorganized, incomplete data is a hindrance to achieving your organization's objectives, whether those are commercial, humanitarian, governmental, or anything else.

Also, Colin, some of the complexities you're talking about may be data privacy regulations, for example keeping PHI and PII in different systems.

One of the main benefits of having an MDM in that environment is that you have a central ID, so you can link records across systems with that ID without needing to share PHI across systems.
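A minimal sketch of the central-ID idea, assuming a hypothetical crosswalk from (source system, local ID) pairs to master IDs. The system names and IDs are invented for illustration. The point is that systems can be linked by looking up IDs alone, so no PHI attributes need to move between them.

```python
from typing import Optional

# Hypothetical crosswalk kept by the MDM: (system, local ID) -> master ID.
CROSSWALK = {
    ("ehr", "P-001"): "M-42",
    ("billing", "B-777"): "M-42",
    ("crm", "C-9"): "M-42",
    ("crm", "C-10"): "M-43",
}


def master_id_of(system: str, local_id: str) -> Optional[str]:
    """Look up the master ID for one system's local record ID."""
    return CROSSWALK.get((system, local_id))


def linked_ids(system: str, local_id: str) -> dict[str, list[str]]:
    """Find the same entity's local IDs in every system, sharing IDs only."""
    mid = master_id_of(system, local_id)
    out: dict[str, list[str]] = {}
    if mid is None:
        return out
    for (sys_name, lid), m in CROSSWALK.items():
        if m == mid:
            out.setdefault(sys_name, []).append(lid)
    return out
```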

And that's also why we're so excited about this bring-your-own-agent architecture. If you have something you've built that's running in a regulated environment, you can send relevant updates about records to that regulated environment, have them processed there, and only put the necessary pieces back into Tamr. That doesn't even necessarily need to look like updating things in Tamr, other than saying, in effect, "we've checked this record the way we need to." The point is that the ease of integrating with all those spaces is also a helpful way to navigate the complex landscapes you mentioned.

Wonderful. Thank you both.

Next question, from Kyle: You mentioned lead scoring. Can you give more details on how curated data may impact lead scoring, ABM segmentation, or AI-generated recommendations?

Any best practices for treating curated data differently inside activation systems like CRM, MAP, and ad platforms?

Since you used the example, I'm going to pass this one to you.

Yeah. Sure.

I think the point of the demo is that without a certain level of data quality, you can't do lead scoring, or at least you can't do it effectively in any meaningful way.

So there's an obvious benefit, which is knowing enough to do lead scoring at all, that we think comes from doing it on top of curated data. Going to the best practices: I don't want to tell you how to run your marketing department, but what we want to enable is that however you choose to run it, it's easy to have the latest and greatest data from Tamr to do that effectively.

If I were recommending something on the segmentation side, it would be segmenting your data into curated and uncurated groups and keeping track of marketing attribution to the curation activity. That ties into an idea that has been talked about a little in the space, but that I haven't seen gain traction anywhere: tying business impact to data curation generally. So I think there is an opportunity for marketing leaders to work with whoever owns the MDM system to attribute results to the fact that curation happened, and to score those leads effectively.
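The attribution idea can be illustrated with simple arithmetic. Assuming, hypothetically, that each lead is tagged with whether its record was curated and whether it converted, this Python sketch compares conversion rates between the curated and uncurated segments. A real attribution analysis would also need to account for how the segments were chosen; this shows only the basic comparison.

```python
from typing import Iterable, Tuple


def conversion_by_segment(leads: Iterable[Tuple[bool, bool]]) -> Tuple[float, float, float]:
    """leads: (curated, converted) pairs.

    Returns (curated conversion rate, uncurated conversion rate, difference).
    """
    conversions = {True: 0, False: 0}
    totals = {True: 0, False: 0}
    for curated, converted in leads:
        totals[curated] += 1
        conversions[curated] += int(converted)
    if totals[True] == 0 or totals[False] == 0:
        raise ValueError("need at least one lead in each segment")
    curated_rate = conversions[True] / totals[True]
    uncurated_rate = conversions[False] / totals[False]
    return curated_rate, uncurated_rate, curated_rate - uncurated_rate
```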

Thanks, Clint.

One more question, from Anonymous: There is a trend of MDM being an embedded capability on the data platform and supporting federated data curation. How does Tamr integrate with ISVs like Databricks and Snowflake?

Yeah, it's a great question. From our view, I'd say a couple of things. One, often when you're using a system like Databricks or Snowflake, you end up with many different tables that all represent the same underlying entity. You may have ten, fifteen, twenty tables of customer data, and what you really want is to bring them together into a single view of the customer.

And the issue of the IDs, as we showed in the demo, is exactly the same. You still end up with multiple IDs; they're just in the same system, whether Databricks or Snowflake, rather than necessarily across systems.

So in that sense, we treat a system like that like any other.

The other piece I thought was interesting about the question is this idea of federated data curation. We didn't show this in the demo, but everything we're doing from a Curator Hub perspective is also available through a set of APIs. So you can imagine a scheme in which people do curation outside of this interface and simply post those curation events into the system.

Again, we didn't show that in the demo, but it's certainly possible. If, for example, you wanted to build a Snowflake UI to curate the data, you could post those changes into Tamr. That would be totally acceptable and, frankly, probably a very cool way of doing it.

One of the most exciting points, to me anyway, about what Anthony just said is that you can have federated feedback with centralized curation. You can source feedback on your records from wherever you want but still maintain data governance processes for who can go into Curator Hub and press the actual update button.
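Federated feedback with centralized curation can be sketched as a queue that accepts proposals from anywhere but only lets authorized curators apply them. This is a hypothetical Python illustration; the `Feedback` fields, the `CURATORS` set, and the in-memory record store are assumptions, not Curator Hub's actual API or permission model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    record_id: str
    field: str
    proposed_value: str
    source: str  # hypothetical origin, e.g. "snowflake-app"


CURATORS = {"alice"}  # hypothetical users allowed to apply updates


class FeedbackQueue:
    def __init__(self) -> None:
        self.pending: list[Feedback] = []
        self.records: dict[str, dict[str, str]] = {}

    def submit(self, fb: Feedback) -> None:
        """Federated side: anyone, from any tool, can propose a change."""
        self.pending.append(fb)

    def approve(self, user: str, fb: Feedback) -> None:
        """Centralized side: only curators may apply a change to a record."""
        if user not in CURATORS:
            raise PermissionError(f"{user} may not apply updates")
        self.pending.remove(fb)  # raises ValueError if fb is not pending
        self.records.setdefault(fb.record_id, {})[fb.field] = fb.proposed_value
```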

Great.

A couple more questions, if you're both okay with that. One more from Anonymous: How do you envision human curators collaborating with AI agents in the Curator Hub? How will that work?

Clint, why don't...

Yeah, I can start with that. Today, it's primarily through tasks, with agents creating tasks for people to review.

Eventually, we hope to let our curators configure what those tasks are, meaning what sorts of tasks they want the agents to create for them.

And then also how agents create the tasks. That means starting to configure the agents themselves in terms of what gets created.

The natural extension is being able to select your own agents. As we expand our prebuilt library of agents, we envision letting people point specific agents toward specific segments of their data, because I think the art here will be figuring out when, how, and which records are worth sending to an agent.

That's something we're going to own in the beginning, to help make you as successful as possible. But over time, we want to enable configuration so that our customers can leverage agents in the way that makes the most sense to them, for the data they care about most.
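Pointing specific agents at specific segments of data amounts to a routing decision. Here is a minimal, hypothetical Python sketch using first-match predicate rules; the rule conditions, field names, and agent names are invented for illustration and are not part of Tamr's product.

```python
from typing import Any, Callable, Optional

Record = dict[str, Any]

# Hypothetical first-match rules: (predicate, agent name).
RULES: list[tuple[Callable[[Record], bool], str]] = [
    # Enterprise records missing an industry go to an enrichment agent.
    (lambda r: r.get("segment") == "enterprise" and not r.get("industry"), "enrichment-agent"),
    # Likely duplicates go to a dedupe agent that creates review tasks.
    (lambda r: r.get("duplicate_score", 0.0) >= 0.8, "dedupe-review-agent"),
]


def route(record: Record) -> Optional[str]:
    """Return the first agent whose rule matches, or None to skip agents."""
    for predicate, agent in RULES:
        if predicate(record):
            return agent
    return None
```

Returning `None` for records no rule matches reflects the point that not every record is worth sending to an agent.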

Alright. One more.

Sorry, these are coming in in real time. From Tiffany: If a user has access to only some of the data for a golden record (say there are top secret elements, but the user only has access to confidential information), how would Tamr display that information? Is it blurred out?

Yeah. Anthony, it looks like you may have answered this, but the short answer is yes, Tiffany. You can mark any field as what we call a sensitive field; call it top secret.

If a field is marked that way, it's blurred out for viewers who don't have permission to see it.
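Field-level masking of this kind can be sketched in a few lines. In this hypothetical Python example, each sensitive field carries a label such as `top_secret`, and a viewer sees the value only if they hold that label. The labels, the mask string, and the function name are assumptions, not Tamr's implementation, which blurs the field in the UI.

```python
from typing import Any, Mapping

MASK = "*****"


def render_for_viewer(record: Mapping[str, Any],
                      sensitive: Mapping[str, str],
                      viewer_labels: set[str]) -> dict[str, Any]:
    """Mask each sensitive field whose label the viewer does not hold.

    `sensitive` maps field name -> label (e.g. "top_secret"); fields not
    listed are treated as non-sensitive and always shown.
    """
    return {
        name: MASK if name in sensitive and sensitive[name] not in viewer_labels else value
        for name, value in record.items()
    }
```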

Excellent. Thank you.

Alright. Well, that sums up all of our questions for today. Thank you everyone for joining us, and we hope you found the session valuable. Just a quick reminder, today's webinar will be available on demand. We'll send out a link via email so you can rewatch it or share it with your colleagues.

And then before you go, I'm going to launch a quick survey. We'd, of course, love your feedback so we